This case is described in full detail in How Not to Be Wrong by Jordan Ellenberg.

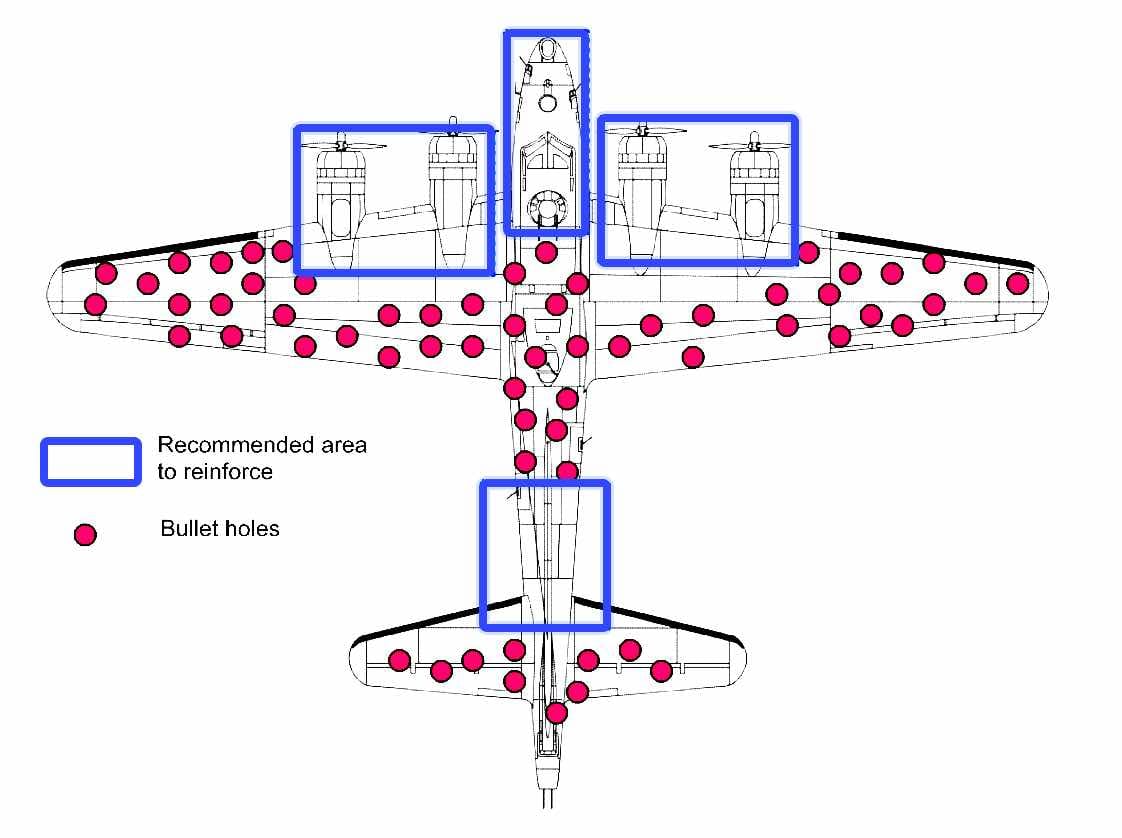

In the middle of World War II, the U.S. military had a problem. Many of its aircraft weren’t coming back from missions, and those that did return were riddled with bullet holes, some more than others. The military wanted to reinforce the most damaged areas to increase survival rates. When analysts mapped the bullet holes on returning planes, a clear pattern emerged: most of the damage was in the fuselage and wings, while the engines had far fewer hits.

The logical conclusion seemed obvious: armor the parts of the plane that were getting hit the most. If these areas were sustaining the heaviest fire, that must be where reinforcement was most needed.

Abraham Wald, a mathematician with the Statistical Research Group (SRG), saw the mistake in this thinking. His insight? The planes they were analyzing weren’t a complete dataset. These were the lucky ones—the survivors. The ones that didn’t make it back, the ones that were truly lost, were likely hit in the areas where damage was least observed. In other words, the missing data—the planes that never returned—held the key to solving the problem.

Instead of armoring the most visibly damaged areas, the military needed to reinforce the parts that showed the least damage on the returning planes. Why? Because a hit in those areas, above all the engines, was far more likely to bring a plane down, so planes damaged there rarely made it home to be counted.

This insight changed how military engineers approached aircraft survivability, and it gave us one of the clearest examples of survivorship bias: a cognitive trap that affects not just warplanes but decisions in every domain of life.
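Wald’s reasoning can be reproduced with a small simulation. The sketch below is an illustration under made-up assumptions, not Wald’s actual analysis: the section names, their area shares, and the per-hit lethality values in `SECTIONS` are hypothetical, and so are the helper functions `fly_mission` and `hit_shares`. Every plane is hit at random in proportion to each section’s area, but an engine hit is assumed to be far more likely to down the plane. Counting hits only on the planes that return then makes the engines look like the safest part of the aircraft.

```python
import random
from collections import Counter

# Hypothetical parameters for illustration only (not Wald's or Ellenberg's data):
# section -> (share of the plane's area, probability that one hit there downs the plane)
SECTIONS = {
    "engine": (0.15, 0.50),
    "fuselage": (0.45, 0.02),
    "wings": (0.40, 0.02),
}


def fly_mission(rng, hits_per_plane=5):
    """Simulate one plane: return (survived, list of sections that were hit)."""
    names = list(SECTIONS)
    area_weights = [SECTIONS[name][0] for name in names]
    # Hits land at random, in proportion to each section's area.
    hits = rng.choices(names, weights=area_weights, k=hits_per_plane)
    # The plane survives only if none of its hits turns out to be lethal.
    survived = all(rng.random() >= SECTIONS[name][1] for name in hits)
    return survived, hits


def hit_shares(n_planes=10_000, seed=0):
    """Share of hits per section: across all planes vs. returning planes only."""
    rng = random.Random(seed)
    all_hits, survivor_hits = Counter(), Counter()
    for _ in range(n_planes):
        survived, hits = fly_mission(rng)
        all_hits.update(hits)
        if survived:
            # This is the only data the analysts could actually see.
            survivor_hits.update(hits)

    def shares(counter):
        total = sum(counter.values())
        return {name: counter[name] / total for name in SECTIONS}

    return shares(all_hits), shares(survivor_hits)


if __name__ == "__main__":
    everyone, returned = hit_shares()
    for name in SECTIONS:
        print(f"{name:<9} all planes: {everyone[name]:.2f}   returned: {returned[name]:.2f}")
```

Under these invented numbers, the engine’s share of hits comes out near 0.15 across all planes but only around 0.08 on the planes that returned, even though every section was hit in proportion to its area. The shortfall on the survivors is not evidence that engines are rarely hit; it is the trace left by the planes that never came back.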

Survivorship Bias: Debugging the Thinking

Survivorship bias, a form of selection bias, happens when we draw conclusions based only on the visible, surviving examples and ignore everything that didn’t make it through the filter.

It’s a natural human tendency. We celebrate successful entrepreneurs and try to emulate their habits, but we rarely study the thousands of failed startups that followed the exact same strategies. We listen to people who survived terrible medical conditions and attribute their survival to a particular treatment, overlooking those who followed the same course but didn’t make it. We marvel at great leaders who “took risks” without acknowledging the countless others who took similar risks and failed spectacularly.
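The startup example can be made concrete with a few counts. The numbers in `COHORT` below are invented purely for illustration, and the two helper functions are hypothetical. Studying only the winners suggests the strategy is the secret, since 9 out of 10 of them used it; only the full cohort, failures included, reveals that startups with and without the strategy succeed at the same 1-in-10 rate.

```python
from fractions import Fraction

# Hypothetical cohort (illustrative numbers, not real data):
# (followed_strategy, succeeded) -> number of startups
COHORT = {
    (True, True): 90,
    (True, False): 810,
    (False, True): 10,
    (False, False): 90,
}


def share_of_winners_using_strategy(cohort):
    """What we see when we only study survivors: P(strategy | success)."""
    winners = cohort[(True, True)] + cohort[(False, True)]
    return Fraction(cohort[(True, True)], winners)


def success_rate(cohort, followed):
    """What we need the failures for: P(success | strategy choice)."""
    total = cohort[(followed, True)] + cohort[(followed, False)]
    return Fraction(cohort[(followed, True)], total)


if __name__ == "__main__":
    print("winners who used the strategy:", share_of_winners_using_strategy(COHORT))  # 9/10
    print("success rate with strategy:   ", success_rate(COHORT, True))  # 1/10
    print("success rate without strategy:", success_rate(COHORT, False))  # 1/10
```

The strategy looks decisive only because most startups in this made-up cohort followed it; the 810 failures that used it never make it into the success stories.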

Wald’s genius wasn’t just in seeing that the planes were coming back damaged in certain areas—it was in realizing that the missing planes were the real dataset. The real question wasn’t "where are the bullet holes?" It was "why aren’t there bullet holes in certain places?" And that’s the question that led to better, data-driven decisions.

The reasoning behind Wald’s insight carries over to complex problem-solving: don’t just look at what’s in front of you; ask what’s missing. For example:

- Are we only studying systems that crash, or are we also analyzing systems that degrade silently?

- Are we training models only on successful outcomes, or are we including failures in our dataset?

- Are we preparing only for attacks we’ve seen, or are we looking at the threats that go undetected?

A Methodology for Clearer Thinking

At its core, good decision-making requires asking:

- What am I not seeing?

- Is my dataset complete, or am I only looking at survivors?

- What assumptions am I making that might be flawed?

This way of thinking isn’t just useful in wartime strategy; it applies whenever we draw conclusions from data that has already been filtered.